Top 10 Data Analytics Tools for Every Business

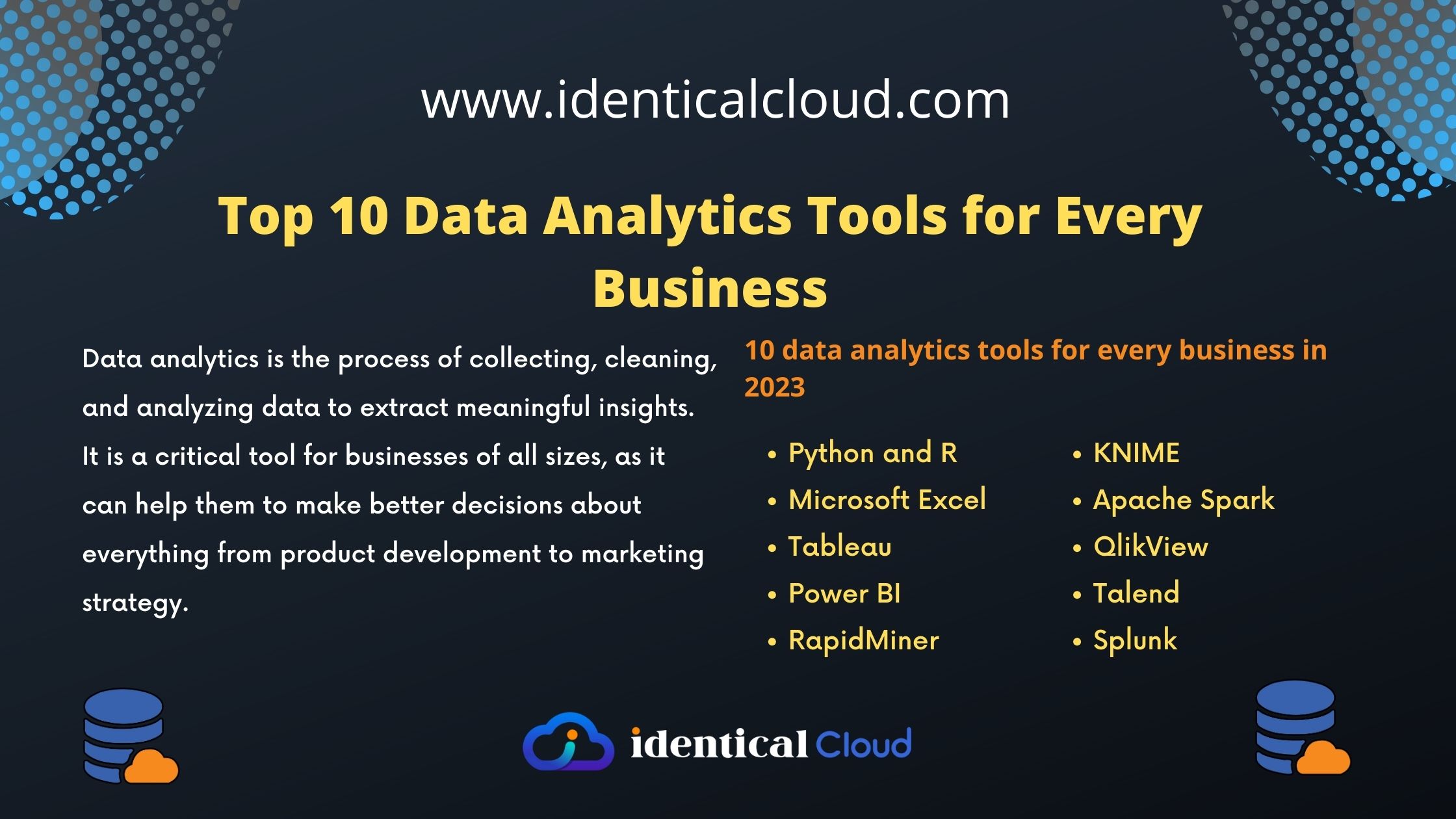

Data analytics is the process of collecting, cleaning, and analyzing data to extract meaningful insights. It is a critical tool for businesses of all sizes, as it can help them to make better decisions about everything from product development to marketing strategy.

There are a wide variety of data analytics tools available, each with its own strengths and weaknesses. Choosing the right tool for your business will depend on your specific needs and budget.

Here is a list of the top 10 data analytics tools for every business in 2023:

Python and R:

Python and R are two of the most popular programming languages for data analytics. They are both open-source and offer a wide range of libraries and tools for data manipulation, visualization, and machine learning.

Python is a general-purpose programming language with a readable syntax, which makes it approachable for beginners while remaining productive for experienced programmers. It has a large community of users and developers, and there are many tutorials, books, and online courses to help you learn it.

R is a statistical programming language designed for data analysis and visualization. It is a good choice for users with a background in statistics or mathematics. Like Python, R has a large community of users and developers and a wide range of learning resources.

Advantages of using Python and R for data analytics:

- Powerful and versatile: Python and R are both powerful and versatile programming languages that can be used for a wide range of data analytics tasks.

- Open-source: Python and R are both open-source programming languages, which means that they are free to use and distribute.

- Large community: Python and R both have large communities of users and developers, which means that there are many resources available online and in libraries to help you learn how to use them.

Popular Python and R libraries for data analytics:

- Python: NumPy, Pandas, scikit-learn, Matplotlib, Seaborn

- R: dplyr, tidyr, ggplot2, caret

Use cases for Python and R in data analytics:

- Data cleaning and preparation: Python and R can be used to clean and prepare data for analysis. This includes tasks such as removing duplicate rows, handling missing values, and converting data types.

- Exploratory data analysis: Python and R can be used to perform exploratory data analysis (EDA). This includes tasks such as summarizing data, visualizing data, and identifying patterns and trends.

- Statistical analysis: Python and R can be used to perform a wide range of statistical analysis tasks, such as hypothesis testing, regression analysis, and time series analysis.

- Machine learning: Python and R can be used to develop and deploy machine learning models. Machine learning models can be used to make predictions, classify data, and identify clusters.
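The cleaning steps listed above (removing duplicate rows, handling missing values, converting data types) can be sketched in a few lines of Python. This is a minimal illustration that uses only the standard library so it runs anywhere; the column names and sample rows are made up for the example, and in practice a library such as Pandas would typically do the same work with less code.

```python
import csv
import io
import statistics

# Hypothetical raw export: one duplicate row and one missing revenue value.
RAW_CSV = """region,units,revenue
North,10,250.0
South,4,
North,10,250.0
East,7,175.5
"""

def clean_rows(text):
    """Drop exact duplicate rows, convert types, and fill missing revenue."""
    seen = set()
    rows = []
    for record in csv.DictReader(io.StringIO(text)):
        key = tuple(record.items())
        if key in seen:  # remove duplicate rows
            continue
        seen.add(key)
        rows.append({
            "region": record["region"],
            "units": int(record["units"]),  # text -> integer
            "revenue": float(record["revenue"]) if record["revenue"] else None,
        })
    # Handle missing values: fill with the median of the known revenues.
    known = [r["revenue"] for r in rows if r["revenue"] is not None]
    fill = statistics.median(known)
    for r in rows:
        if r["revenue"] is None:
            r["revenue"] = fill
    return rows

cleaned = clean_rows(RAW_CSV)
# A very small piece of exploratory analysis: row count and mean revenue.
summary = {
    "rows": len(cleaned),
    "mean_revenue": statistics.mean(r["revenue"] for r in cleaned),
}
print(cleaned)
print(summary)
```

Filling gaps with the median is only one possible choice; dropping incomplete rows or using a domain-specific default may suit other datasets better.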

Microsoft Excel:

Excel is a spreadsheet program that is widely used for data analysis. It offers a variety of features for data cleaning, visualization, and statistical analysis.

Advantages of using Microsoft Excel for data analytics:

- Widely used: Excel is one of the most widely used software programs in the world, which means that it is easy to find people who are familiar with Excel and can help you with your data analysis.

- Easy to use: Excel is relatively easy to use, even for people who do not have a background in programming.

- Versatile: Excel can be used for a wide range of data analysis tasks, from basic data cleaning and visualization to more complex statistical analysis.

- Affordable: Excel is included in the Microsoft Office suite, which is relatively affordable.

Popular Excel features for data analytics:

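- Data tables and the Analysis ToolPak: Excel data tables support what-if analysis by showing how changing one or two inputs affects the result of a formula. For statistical tasks such as hypothesis testing and regression analysis, Excel provides the Analysis ToolPak add-in.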

- Pivot tables: Pivot tables can be used to summarize and analyze large datasets.

- Charts and graphs: Excel offers a variety of charts and graphs that can be used to visualize data.

- Formulas and functions: Excel offers a variety of formulas and functions that can be used to perform mathematical and statistical calculations.
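For readers who have not used a pivot table, it is essentially a group-and-aggregate operation: pick a field for the rows, a field for the columns, and a value to summarize. The sketch below shows that idea in plain Python rather than in Excel itself; the sales records and the `pivot_sum` helper are hypothetical.

```python
from collections import defaultdict

# Hypothetical sales records: (region, product, amount).
SALES = [
    ("North", "Widget", 120.0),
    ("North", "Gadget", 80.0),
    ("South", "Widget", 200.0),
    ("North", "Widget", 30.0),
    ("South", "Gadget", 50.0),
]

def pivot_sum(records):
    """Return {row_label: {column_label: summed value}}, like a SUM pivot table."""
    table = defaultdict(lambda: defaultdict(float))
    for row_label, column_label, value in records:
        table[row_label][column_label] += value
    # Convert nested defaultdicts to plain dicts for easier inspection.
    return {row: dict(cols) for row, cols in table.items()}

pivot = pivot_sum(SALES)
for region, totals in sorted(pivot.items()):
    print(region, totals)
```

Swapping `+=` for a count or an average gives the other common pivot summaries.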

Use cases for Microsoft Excel in data analytics:

- Data cleaning and preparation: Excel can be used to clean and prepare data for analysis. This includes tasks such as removing duplicate rows, handling missing values, and converting data types.

- Exploratory data analysis (EDA): Excel can be used to perform EDA. This includes tasks such as summarizing data, visualizing data, and identifying patterns and trends.

- Statistical analysis: Excel can be used to perform a variety of statistical analysis tasks, such as hypothesis testing, regression analysis, and time series analysis.

- Data visualization: Excel can be used to create a variety of charts and graphs to visualize data.

Tableau:

Tableau is a data visualization tool that allows users to create interactive dashboards and reports. It is easy to use and does not require any programming knowledge.

Advantages of using Tableau for data analytics:

- Easy to use: Tableau is very easy to use, even for people with no prior experience with data visualization. It has a drag-and-drop interface that makes it easy to create dashboards and reports.

- Powerful: Tableau is a powerful tool that can be used to create complex data visualizations. It offers a wide range of features, such as filters, calculated fields, and parameters.

- Interactive: Tableau dashboards and reports are interactive, which means that users can explore the data and drill down into specific details.

- Collaborative: Tableau dashboards and reports can be shared with others, which makes it easy to collaborate on data analysis projects.

Use cases for Tableau in data analytics:

- Exploratory data analysis (EDA): Tableau can be used to perform EDA. This includes tasks such as summarizing data, visualizing data, and identifying patterns and trends.

- Data visualization: Tableau can be used to create a variety of data visualizations, such as charts, graphs, and maps.

- Dashboarding and reporting: Tableau can be used to create interactive dashboards and reports that can be used to share insights with others.

Popular Tableau features for data analytics:

- Drag-and-drop interface: Tableau has a drag-and-drop interface that makes it easy to create dashboards and reports.

- Calculated fields: Tableau allows users to create calculated fields, which are new fields that are calculated based on existing fields.

- Parameters: Tableau allows users to create parameters, which are variables that can be used to control the behavior of a dashboard or report.

- Filters: Tableau allows users to filter data to see only the data that is relevant to them.

- Drill down: Tableau allows users to drill down into data to see more detailed information.
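To make calculated fields, parameters, and filters more concrete, here is a rough Python analogy. It is not Tableau syntax: the field names, the sample orders, and the helper functions are all hypothetical, and the point is only to show how a derived field and a viewer-controlled threshold work together.

```python
# Hypothetical order records; field names are illustrative only.
ORDERS = [
    {"category": "Furniture", "sales": 400.0, "profit": 40.0},
    {"category": "Technology", "sales": 900.0, "profit": 270.0},
    {"category": "Office Supplies", "sales": 150.0, "profit": 45.0},
]

def add_profit_ratio(rows):
    """Calculated field: derive a new field from existing ones."""
    return [{**row, "profit_ratio": row["profit"] / row["sales"]} for row in rows]

def filter_by_min_ratio(rows, min_ratio):
    """Filter driven by a parameter: keep rows at or above the chosen threshold."""
    return [row for row in rows if row["profit_ratio"] >= min_ratio]

enriched = add_profit_ratio(ORDERS)
# The parameter is the value a dashboard viewer would adjust.
visible = filter_by_min_ratio(enriched, min_ratio=0.25)
print([row["category"] for row in visible])
```

Changing `min_ratio` changes what is shown without touching the underlying data, which is the role a parameter plays in a dashboard.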

Power BI:

Power BI is a business intelligence tool from Microsoft. It offers a variety of features for data visualization, reporting, and machine learning.

Advantages of using Power BI for data analytics:

- Powerful: Power BI is a powerful tool that can be used to create complex data visualizations and reports. It offers a wide range of features, such as DAX measures, calculated columns, and hierarchies.

- Easy to use: Power BI has a user-friendly interface that makes it easy to create visualizations and reports. It also offers a variety of templates and pre-built reports that users can get started with quickly.

- Affordable: Power BI has a free tier that makes it accessible to businesses of all sizes. It also offers paid plans with additional features and functionality.

- Integrated with Microsoft products: Power BI is integrated with other Microsoft products, such as Excel and Azure, which makes it easy to share data and insights across different applications.

Use cases for Power BI in data analytics:

- Exploratory data analysis (EDA): Power BI can be used to perform EDA. This includes tasks such as summarizing data, visualizing data, and identifying patterns and trends.

- Data visualization: Power BI can be used to create a variety of data visualizations, such as charts, graphs, and maps.

- Dashboarding and reporting: Power BI can be used to create interactive dashboards and reports that can be used to share insights with others.

- Machine learning: Power BI offers a variety of machine learning features, such as forecasting and anomaly detection.

Popular Power BI features for data analytics:

- DAX measures: DAX measures are calculations written in the DAX formula language that are evaluated at query time, so their results change with the filters applied to a report.

- Calculated columns: Calculated columns are new columns that are calculated based on existing columns.

- Hierarchies: Hierarchies allow users to group data together in a meaningful way.

- Q&A: Q&A is a natural language query feature that allows users to ask questions about their data and get answers in the form of visualizations and reports.

- Machine learning: Power BI offers a variety of machine learning features, such as forecasting and anomaly detection.
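The difference between a calculated column and a measure is easier to see in code. The sketch below is a Python analogy, not DAX: a calculated column is computed once per row and stored with the data, while a measure is an aggregate that is recomputed for whichever rows the current filter leaves. The table and the `total_sales` function are hypothetical.

```python
# Hypothetical fact table; names are illustrative only.
SALES = [
    {"region": "East", "quantity": 2, "unit_price": 10.0},
    {"region": "East", "quantity": 1, "unit_price": 25.0},
    {"region": "West", "quantity": 5, "unit_price": 4.0},
]

# Calculated column: evaluated row by row and stored alongside the data.
for row in SALES:
    row["line_total"] = row["quantity"] * row["unit_price"]

def total_sales(rows, region=None):
    """Measure: an aggregate re-evaluated for the rows the current filter leaves."""
    selected = [r for r in rows if region is None or r["region"] == region]
    return sum(r["line_total"] for r in selected)

print(total_sales(SALES))                 # all regions
print(total_sales(SALES, region="East"))  # same measure, narrower filter
```

The same measure definition yields different numbers depending on the filter, whereas the stored `line_total` values never change.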

RapidMiner:

RapidMiner is an integrated data science platform that offers a variety of tools for data preparation, machine learning, and model deployment.

Advantages of using RapidMiner for data analytics:

- Integrated: RapidMiner is an integrated platform that offers a variety of tools for data preparation, machine learning, and model deployment. This means that users can perform all of their data analytics tasks in one place, without having to switch between different tools.

- Powerful: RapidMiner is a powerful tool that can be used to perform a wide range of data analytics tasks, from basic data cleaning and visualization to more complex machine learning and statistical analysis.

- Easy to use: RapidMiner has a user-friendly interface that makes it easy to use, even for users with no prior experience in data science.

- Scalable: RapidMiner is scalable and can be used to handle large datasets. It also offers a variety of features for enterprise use, such as security, governance, and collaboration.

Use cases for RapidMiner in data analytics:

- Data preparation: RapidMiner offers a variety of tools for data preparation, such as data cleaning, data transformation, and feature engineering.

- Machine learning: RapidMiner offers a variety of machine learning algorithms for tasks such as classification, regression, clustering, and anomaly detection.

- Model deployment: RapidMiner offers a variety of tools for deploying machine learning models to production.

Popular RapidMiner features for data analytics:

- Operator Library: RapidMiner’s Operator Library contains over 1,500 operators for data preparation, machine learning, and model deployment.

- Visual Workflow Builder: RapidMiner’s Visual Workflow Builder allows users to create data science workflows by dragging and dropping operators.

- Auto Model: RapidMiner’s Auto Model feature automatically trains and evaluates machine learning models, making it easy to find the best model for your data.

- RapidMiner Server: RapidMiner Server is an enterprise version of RapidMiner that offers features such as security, governance, and collaboration.
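The idea behind automated model selection can be sketched as: fit several candidate models, score each on data held back from training, and keep the best scorer. The Python below illustrates that general pattern only. It does not reproduce how Auto Model works internally, and the data, candidate models, and error metric are assumptions chosen for brevity.

```python
import statistics

# Hypothetical (x, y) observations, split into training and hold-out sets.
TRAIN = [(1, 2.1), (2, 3.9), (3, 6.2), (4, 7.8)]
HOLDOUT = [(5, 10.1), (6, 11.9)]

def fit_mean(train):
    """Baseline: always predict the mean of the training targets."""
    mean_y = statistics.mean(y for _, y in train)
    return lambda x: mean_y

def fit_line(train):
    """Ordinary least squares fit of y = slope * x + intercept."""
    mean_x = statistics.mean(x for x, _ in train)
    mean_y = statistics.mean(y for _, y in train)
    slope = (sum((x - mean_x) * (y - mean_y) for x, y in train)
             / sum((x - mean_x) ** 2 for x, _ in train))
    intercept = mean_y - slope * mean_x
    return lambda x: slope * x + intercept

def mean_abs_error(model, data):
    """Average absolute difference between predictions and actual values."""
    return statistics.mean(abs(model(x) - y) for x, y in data)

candidates = {"mean baseline": fit_mean, "linear regression": fit_line}
# Train every candidate on TRAIN and score it on the unseen HOLDOUT rows.
scores = {name: mean_abs_error(fit(TRAIN), HOLDOUT) for name, fit in candidates.items()}
best = min(scores, key=scores.get)
print(scores)
print(best)
```

Scoring on held-out data rather than on the training data is what keeps the comparison honest.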

KNIME:

KNIME, which stands for Konstanz Information Miner, is an open-source data analytics platform that, like RapidMiner, offers a variety of tools for data preparation, machine learning, and model deployment. It is a powerful and versatile tool that can be used by businesses of all sizes to extract insights from their data and make better decisions.

Advantages of using KNIME for data analytics:

- Open-source: KNIME is an open-source tool, which means that it is free to use and distribute.

- Powerful: KNIME is a powerful tool that can be used to perform a wide range of data analytics tasks, from basic data cleaning and visualization to more complex machine learning and statistical analysis.

- Versatile: KNIME can be used for a variety of data analytics tasks, including:

  - Data preparation: KNIME offers a variety of tools for data preparation, such as data cleaning, data transformation, and feature engineering.

  - Machine learning: KNIME offers a variety of machine learning algorithms for tasks such as classification, regression, clustering, and anomaly detection.

  - Model deployment: KNIME offers a variety of tools for deploying machine learning models to production.

- Easy to use: KNIME has a user-friendly interface that makes it easy to use, even for users with no prior experience in data science.

- Community: KNIME has a large and active community of users and developers who contribute to the project and provide support to users.

Use cases for KNIME in data analytics:

- Customer churn prediction: KNIME can be used to predict which customers are likely to churn, so that businesses can take steps to retain them.

- Fraud detection: KNIME can be used to detect fraudulent transactions, so that businesses can protect themselves from financial loss.

- Medical diagnosis: KNIME can be used to develop machine learning models that can help doctors diagnose diseases more accurately and efficiently.

- Product recommendation: KNIME can be used to develop machine learning models that can recommend products to customers based on their past purchase history and browsing behavior.

Popular KNIME nodes for data analytics:

- File Reader: The File Reader node reads delimited text files such as CSV. Companion nodes, such as Excel Reader and JSON Reader, handle other file formats.

- Data cleaning: KNIME provides nodes for cleaning data, such as Duplicate Row Filter for removing duplicate rows and Missing Value for handling missing values, along with nodes for converting data types.

- Data transformation: Nodes such as Row Filter, Sorter, and GroupBy transform data by filtering, sorting, and aggregating it.

- Machine Learning: KNIME offers a variety of machine learning nodes for tasks such as classification, regression, clustering, and anomaly detection.

- Model Deployment: KNIME offers nodes for moving machine learning models toward production, such as the PMML Writer node, which exports a trained model in the PMML format.
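A KNIME workflow is a chain of nodes in which each node takes a table, does one job, and passes the result on. The Python sketch below mimics that structure with ordinary functions. It is an analogy rather than KNIME code, and the customer records and node functions are hypothetical.

```python
from functools import reduce

# Hypothetical customer records; None marks a missing value.
CUSTOMERS = [
    {"id": 1, "monthly_spend": 40.0, "active": True},
    {"id": 2, "monthly_spend": None, "active": True},
    {"id": 3, "monthly_spend": 75.0, "active": False},
    {"id": 4, "monthly_spend": 20.0, "active": True},
]

def drop_missing(rows):
    """Node 1: remove rows with a missing spend value."""
    return [r for r in rows if r["monthly_spend"] is not None]

def keep_active(rows):
    """Node 2: row filter on the 'active' flag."""
    return [r for r in rows if r["active"]]

def sort_by_spend(rows):
    """Node 3: sort by spend, highest first."""
    return sorted(rows, key=lambda r: r["monthly_spend"], reverse=True)

def run_workflow(rows, nodes):
    """Feed each node's output table into the next node."""
    return reduce(lambda table, node: node(table), nodes, rows)

result = run_workflow(CUSTOMERS, [drop_missing, keep_active, sort_by_spend])
print([r["id"] for r in result])
```

Because every step has the same table-in, table-out shape, steps can be reordered or replaced without touching the others, which is much of the appeal of a visual workflow tool.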

Apache Spark:

Apache Spark is a distributed computing framework that can be used for processing large datasets. It is often used for big data analytics and machine learning. Spark can run on its own standalone cluster manager, on Hadoop's YARN resource management system, or on Kubernetes, and it can process data stored in the Hadoop Distributed File System (HDFS) as well as other data sources such as Amazon S3 and Google Cloud Storage.

Advantages of using Apache Spark for data analytics:

- Scalable: Spark is designed to scale to large datasets and multiple machines. It can be used to process terabytes or even petabytes of data.

- Fast: Spark keeps intermediate data in memory where possible and spreads work across many machines, which makes it well suited to iterative and interactive analysis of very large datasets.

- Versatile: Spark can be used for a variety of data analytics tasks, including:

  - Batch processing: Spark can be used to process large datasets in batches, such as performing ETL (extract, transform, and load) operations.

  - Stream processing: Spark can be used to process data streams in near real time, such as analyzing sensor data or social media data.

  - Machine learning: Spark includes its own machine learning library, MLlib, and third-party projects such as TensorFlowOnSpark connect it to other frameworks.

- Easy to use: Spark has a relatively easy-to-use API, and it supports multiple programming languages, including Java, Scala, Python, and R.

Use cases for Apache Spark in data analytics:

- Log analytics: Spark can be used to analyze large volumes of log data to identify patterns and trends.

- Web analytics: Spark can be used to analyze web traffic data to understand how users are interacting with a website.

- Financial analysis: Spark can be used to analyze financial data to identify trends and risks.

- Scientific computing: Spark can be used to perform complex scientific computations on large datasets.

Popular Apache Spark libraries for data analytics:

- Spark SQL: Spark SQL provides a SQL interface for Spark, making it easy to query and analyze data using SQL.

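- Spark MLlib: Spark MLlib provides a variety of machine learning algorithms for tasks such as classification, regression, clustering, and collaborative filtering.

- Spark Streaming: Spark Streaming provides a library for processing data streams in near real time by handling incoming data in small batches. Newer applications typically use Structured Streaming, which is built on Spark SQL.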

- GraphX: GraphX provides a library for graph processing.
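The pattern that lets a framework like Spark scale is simple to state: split the data into partitions, process each partition independently, then merge the partial results. The sketch below shows that pattern on a single machine in plain Python. It does not use the Spark API, and the log lines and helper names are hypothetical.

```python
from collections import Counter
from functools import reduce

# Hypothetical web-server log lines: "<status> <path>".
LOG_LINES = [
    "200 /home", "404 /missing", "200 /home", "500 /checkout",
    "200 /cart", "404 /missing", "200 /home", "200 /cart",
]

def partition(items, n_parts):
    """Split the dataset into roughly equal partitions (one per worker)."""
    return [items[i::n_parts] for i in range(n_parts)]

def count_statuses(lines):
    """Work done independently on each partition (the 'map' side)."""
    return Counter(line.split()[0] for line in lines)

def merge(left, right):
    """Combine partial results from two partitions (the 'reduce' side)."""
    return left + right

partials = [count_statuses(part) for part in partition(LOG_LINES, 3)]
totals = reduce(merge, partials, Counter())
print(dict(totals))
```

In a real cluster the partitions would live on different machines and the per-partition work would run in parallel; here the list comprehension simply runs them one after another.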

QlikView:

QlikView is a business intelligence (BI) and data analytics platform that enables users to rapidly develop and deliver interactive guided analytics applications and dashboards. It is powered by Qlik’s Associative Engine, which allows users to explore data in a natural and intuitive way, without having to write any code.

Advantages of using QlikView for data analytics:

- Ease of use: QlikView is a very easy-to-use tool, even for users with no prior experience in data analytics. It has a drag-and-drop interface and a variety of built-in features that make it easy to create visualizations and dashboards.

- Power and flexibility: QlikView is a powerful and flexible tool that can be used to perform a wide range of data analytics tasks, from loading and transforming data to interactive visualization and statistical analysis.

- Scalability: QlikView can be scaled to handle large datasets and multiple users. It can be deployed on-premises or in the cloud.

- Associative Engine: QlikView’s Associative Engine allows users to explore data in a natural and intuitive way. Users can simply click on data points to see how they are related to other data points, without having to write any code.
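The associative idea can be illustrated with a small sketch: when a user selects a value in one field, every value in the other fields is classified as either associated with that selection or excluded by it. The Python below is a simplified illustration of that behavior, not Qlik's implementation; the records and the `associate` function are hypothetical.

```python
# Hypothetical sales records; field names are illustrative only.
RECORDS = [
    {"region": "North", "product": "Widget", "year": 2022},
    {"region": "North", "product": "Gadget", "year": 2023},
    {"region": "South", "product": "Widget", "year": 2023},
    {"region": "West", "product": "Gizmo", "year": 2022},
]

def associate(records, field, value):
    """For the selection field == value, split every other field's values into
    'associated' (they co-occur with the selection) and 'excluded' (they do not)."""
    matching = [r for r in records if r[field] == value]
    result = {}
    for other in records[0]:
        if other == field:
            continue
        all_values = {r[other] for r in records}
        associated = {r[other] for r in matching}
        result[other] = {"associated": associated, "excluded": all_values - associated}
    return result

state = associate(RECORDS, "product", "Widget")
print(state["region"])
print(state["year"])
```

Showing the excluded values alongside the associated ones is what distinguishes this style of exploration from a plain filter: here, selecting Widget also reveals that West has no Widget sales.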

Use cases for QlikView in data analytics:

- Sales analytics: QlikView can be used to analyze sales data to identify trends, patterns, and opportunities.

- Marketing analytics: QlikView can be used to analyze marketing data to track the effectiveness of marketing campaigns and identify areas for improvement.

- Customer analytics: QlikView can be used to analyze customer data to understand customer behavior and preferences.

- Financial analytics: QlikView can be used to analyze financial data to identify trends, patterns, and risks.

- Operational analytics: QlikView can be used to analyze operational data to improve efficiency and productivity.

Popular QlikView features for data analytics:

- Associative Engine: QlikView’s Associative Engine allows users to explore data in a natural and intuitive way.

- Guided analytics: QlikView is built around guided analytics, in which developers build dashboards and applications that lead users through the data.

- Data visualization: QlikView offers a variety of data visualization features, such as charts, graphs, and maps.

- Data preparation: QlikView offers a variety of features for data preparation, such as data cleaning and transformation.

- Advanced analytics: QlikView can be extended with capabilities such as predictive analytics and anomaly detection, typically by integrating external engines such as R or Python.

Talend:

Talend is a data integration and management suite, with an open-source edition called Talend Open Studio, that can be used to extract, transform, and load data from a variety of sources. It offers a wide range of tools and capabilities for data integration, data quality, data governance, and big data analytics.

Advantages of using Talend for data analytics:

- Comprehensive: Talend offers a comprehensive set of tools covering the data pipeline, from data ingestion through transformation and quality checks to delivery into analytics systems.

- Scalable: Talend is scalable to handle large datasets and complex data analytics workloads.

- Easy to use: Talend has a user-friendly interface and offers a variety of tools for low-code and no-code development.

- Open source: Talend Open Studio is an open source data integration platform.

- Enterprise-grade: Talend offers enterprise-grade features such as security, governance, and collaboration.

Use cases for Talend in data analytics:

- Data integration: Talend can be used to integrate data from a variety of sources, including relational databases, big data platforms, and cloud-based applications.

- Data quality: Talend can be used to cleanse, transform, and validate data to ensure that it is accurate and complete.

- Data governance: Talend can be used to implement data governance policies and procedures to ensure that data is managed securely and efficiently.

- Big data analytics: Talend can be used to perform big data analytics on large datasets using distributed computing frameworks such as Apache Spark and Hadoop.

Popular Talend features for data analytics:

- Talend Open Studio: Talend Open Studio is an open source data integration platform that offers a variety of tools for data extraction, transformation, and loading (ETL).

- Talend Data Fabric: Talend Data Fabric is a commercial data integration platform that offers additional features such as data quality, data governance, and big data analytics.

- Talend Cloud: Talend Cloud is a cloud-based data integration platform that offers the same features as Talend Data Fabric.

- Talend Studio for Big Data: Talend Studio for Big Data is a commercial development environment for big data analytics.
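The extract, transform, and load (ETL) pattern at the heart of a data integration tool can be shown in miniature. The Python sketch below uses only the standard library and an in-memory SQLite database as the target; it is not Talend code, and the source data, table name, and cleaning rules are assumptions made for the example.

```python
import csv
import io
import sqlite3

# Hypothetical source extract; in practice this might be a file or an API response.
SOURCE_CSV = """order_id,customer,amount
1001, alice ,19.99
1002,BOB,5.00
1003,,12.50
"""

def extract(text):
    """Extract: read raw records from the source."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: normalize names, convert types, reject rows with no customer."""
    cleaned = []
    for row in rows:
        customer = row["customer"].strip().title()
        if not customer:
            continue
        cleaned.append((int(row["order_id"]), customer, float(row["amount"])))
    return cleaned

def load(rows, connection):
    """Load: write the cleaned rows into the target table."""
    connection.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    connection.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    connection.commit()

connection = sqlite3.connect(":memory:")
load(transform(extract(SOURCE_CSV)), connection)
loaded = connection.execute(
    "SELECT order_id, customer, amount FROM orders ORDER BY order_id"
).fetchall()
print(loaded)
```

Keeping the three stages as separate functions mirrors how integration tools let you swap a source or a target without rewriting the transformation logic.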

Splunk:

Splunk is a software platform used for searching, monitoring, and analyzing machine-generated data. It can be used to collect data from a variety of sources, including servers, applications, devices, and sensors. Splunk then indexes and analyzes the data to provide insights into system performance, security, and business operations.

Advantages of using Splunk for data analytics:

- Scalability: Splunk can be scaled to handle large volumes of data, making it ideal for big data analytics.

- Real-time analysis: Splunk can analyze data in real time, providing insights into system performance and security as events are happening.

- Machine learning: Splunk includes machine learning features that can be used to identify patterns and anomalies in data.

- Versatility: Splunk can be used for a variety of data analytics tasks, including:

  - IT operations monitoring (ITOM)

  - Security information and event management (SIEM)

  - Business intelligence (BI)

  - Application performance monitoring (APM)

  - IoT analytics

Use cases for Splunk in data analytics:

- Log analysis: Splunk is commonly used to analyze log files from servers, applications, and devices to identify security threats, performance problems, and other issues.

- Application monitoring: Splunk can be used to monitor the performance and health of applications to identify and resolve problems before they impact users.

- Security monitoring: Splunk can be used to monitor security events across an organization’s IT infrastructure to detect and respond to security threats.

- Business intelligence: Splunk can be used to analyze business data to identify trends, patterns, and opportunities.

Popular Splunk features for data analytics:

- SPL: Splunk Search Processing Language (SPL) is a powerful language that can be used to search, analyze, and visualize data in Splunk.

- Dashboards: Splunk dashboards provide a real-time view of key metrics and data trends.

- Alerts: Splunk alerts can be used to notify users of important events, such as security threats or performance problems.

- Machine learning: Splunk includes machine learning features that can be used to identify patterns and anomalies in data.
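The flow described above (collect machine-generated data, turn it into structured events, search it, and raise alerts) can be sketched in plain Python. This is an illustration of the general idea only: it is not SPL or any Splunk API, and the log format, field names, and threshold are made up for the example.

```python
import re
from collections import Counter

# Hypothetical application log lines; the format is made up for this example.
LOG = """2023-10-01T12:00:01 INFO auth login ok user=ana
2023-10-01T12:00:05 ERROR payment timeout order=17
2023-10-01T12:00:09 WARN auth failed login user=bo
2023-10-01T12:00:12 ERROR payment timeout order=18
2023-10-01T12:00:20 ERROR auth token expired user=ana
"""

LINE_PATTERN = re.compile(
    r"^(?P<time>\S+) (?P<level>\S+) (?P<component>\S+) (?P<message>.*)$"
)

def parse(log_text):
    """Turn raw lines into structured events (the indexing step)."""
    events = []
    for line in log_text.splitlines():
        match = LINE_PATTERN.match(line)
        if match:
            events.append(match.groupdict())
    return events

def errors_by_component(events):
    """Search step: keep ERROR events and count them per component."""
    return Counter(e["component"] for e in events if e["level"] == "ERROR")

def alerts(counts, threshold):
    """Alert step: flag components at or above the error threshold."""
    return sorted(name for name, n in counts.items() if n >= threshold)

counts = errors_by_component(parse(LOG))
print(counts)
print(alerts(counts, threshold=2))
```

A production system would run this continuously over incoming data; the sketch processes a fixed block of text once.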

These are just a few of the many data analytics tools available. When choosing a tool for your business, it is important to consider your specific needs and budget. It is also a good idea to try out a few different tools before making a decision.

How to Choose the Right Tool:

Selecting the right data analytics tool for your business depends on various factors, including your specific needs, budget, and technical expertise. Here are some tips to help you choose:

- Identify Your Objectives: Determine what you want to achieve with data analytics. Are you focused on visualization, predictive modeling, data preparation, or a combination of these?

- Consider Scalability: Choose a tool that can grow with your business. Ensure it can handle larger datasets and more complex analyses as your needs evolve.

- Evaluate Ease of Use: Assess the tool’s user-friendliness and whether your team can quickly adopt and utilize it effectively.

- Cost and Budget: Consider the cost of licensing, training, and any additional infrastructure needed. Compare pricing models to find the best fit for your budget.

- Integration: Check if the tool can integrate with your existing software and data sources to streamline workflows.

- Support and Community: Research the availability of support resources, user communities, and documentation for the tool.

Once you have chosen a data analytics tool, you need to learn how to use it effectively. Most of the tools above have official documentation, tutorials, and books to help you get started, and online courses and workshops are available for many of them.

Data analytics can be a powerful tool for businesses of all sizes. By using the right data analytics tools, businesses can make better decisions about everything from product development to marketing strategy.